Summary: Since Reddit did not work well with our RSS feed block, despite its slow request limit and a new improved detection of 429 responses, Pipes now got a dedicated block to access subreddit feeds. That new block uses an rss-bridge instance to solve the problem.

As far as social media sites go Reddit is one of the accessible ones. Not only is the activity on the site visible without an account, Reddit even provides RSS feeds for the submissions to their subreddits. That is very useful especially for sites like Pipes that work well with RSS items.

But until recently Pipes and Reddit did not work well together. When importing a Reddit feed into a pipe, it sometimes worked and sometimes did not. It turned out that the Reddit servers again and again would refuse to serve content when Pipes asked.

That was surprising because at that time our downloader looked like this (slightly simplified):

require 'open-uri'

require 'lru_redux'

# Download and cache downloads. Limit requests to the same domain to not spam it

class Downloader

def initialize()

begin

@@limiter

rescue

@@limiter = LruRedux::TTL::ThreadSafeCache.new(1000, 2)

end

end

def get(url, js = false)

url = URI.parse(URI.escape(url))

result, date = Database.instance.getCache(key: 'url_' + url.to_s + '_' + js.to_s)

if date.nil? || (date + 600) < Time.now.to_i

while (@@limiter.key?(url.host))

sleep(1)

end

@@limiter[url.host] = 1

result = URI.open(url, :allow_redirections => :all).read

Database.instance.cache(key: 'url_' + url.to_s, value: result)

end

return result

end

end

That code did some effort to avoid spamming other sites:

- It caches every downloaded URL for 10 minutes, checking the date an URL was cached with

if date.nil? || (date + 600) < Time.now.to_i. - Pipes will only download data when requested externally, by a feed reader for example, which might be a lot less often than that 10 minute interval.

- It will remember the last 1000 hosts and wait 2 second before sending a new request to a host it just saw. That works via the combination of the LruRedux queue with a time-to-live of 2 seconds and the

while (@@limiter.key?(url.host))

But Reddit still sent a 429 response as soon as it saw Pipes requesting an RSS feed.

429 responses are a way for a server to tell a client to slow down. They can a also contain instructions on how long to wait. Reddit for example did set the retry-after header and filled it with a 7, telling us to wait 7 seconds before trying again. So we did just that in the new version of the downloader:

response = HTTParty.get(url)

if response.code == 429

if response.headers['retry-after'].to_i < 20

sleep response.headers['retry-after'].to_i

response = HTTParty.get(url)

result = response.body

else

result = ""

end

else

result = response.body

end

Whenever a server – that might be Reddit or a different site – responds with a 429 header and sets a reasonable retry-after limit, this code will wait before trying it one more time. If that second requests also fails it will give up.

There is a second change: HTTParty. That is a HTTP client as a ruby gem and an alternative to the open-uri method used before, the hope was that it will improve compatibility.

I made some more changes to accommodate Reddit. Instead of waiting a minimum of two seconds between requests to the same host the downloader would wait two or three seconds, via a two second sleep when the host was still in the queue. And at some point the downloader had code to specifically slow down when it saw that the requested URL lead to Reddit.

But nothing helped, the Reddit server was still not happy.

That is where the new Reddit block comes in. It does two things to reliably get a subreddit’s RSS feed:

- It uses the FOSS project RSS-Bridge to fetch and cache the requested feed

- That software is running on a different server than Pipes itself

The way RSS-Bridge requests Reddit’s RSS feed works, it seems to be slow and cached enough to not offend the server. And by using a different server with a different IP we make sure that additional requests Pipes might make do not add to the load RSS-Bridge causes, which reduces the danger that Reddit’s server places new limits on our RSS-Bridge instance.

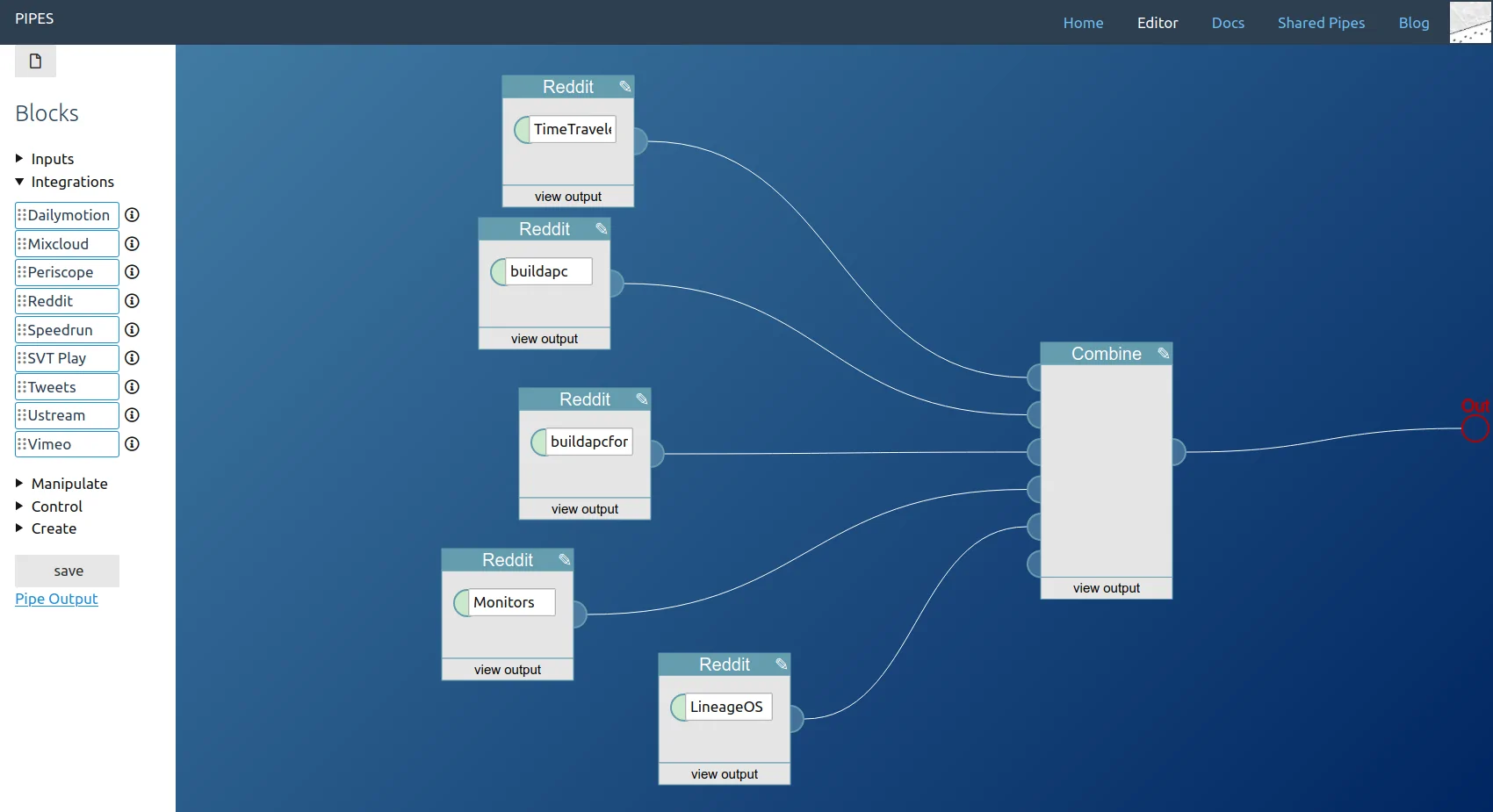

The Reddit block is a new addition to the integration menu of the editor that got added last month. In my testing the block proved to work reliably so far, even when a single pipe contained multiple Reddit feeds, as in this example with five random subreddits:

The improvements made to the downloader will remain active, they will help to reduce the load on other sites and to react better to future 429 responses.